Example overview

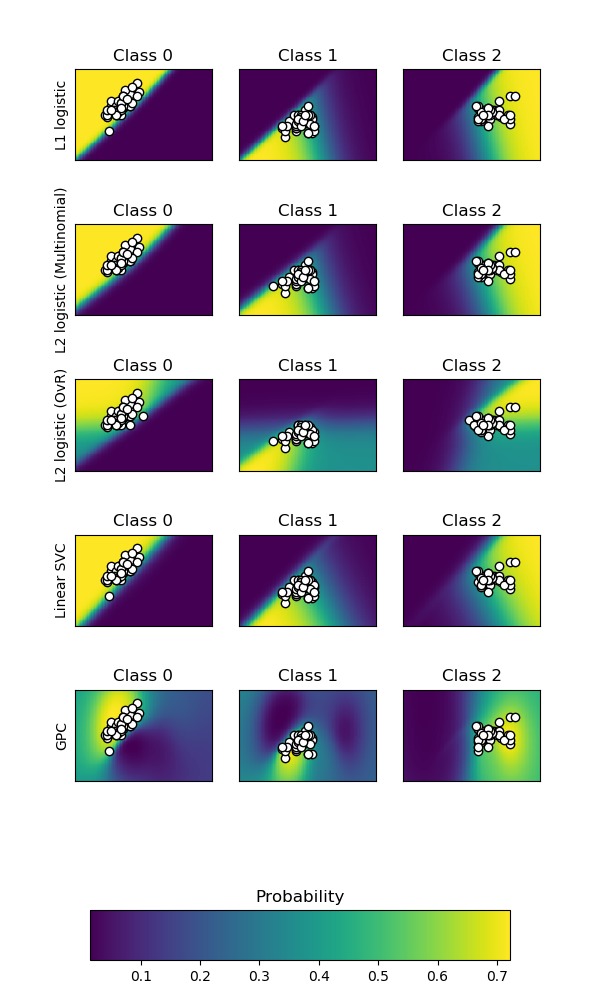

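This example shows how to plot the class probabilities predicted by different classifiers. On a dataset with 3 classes, we use the following models for multiclass classification: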

- Support vector classification (SVM classification, SVC for short)

  - A linear SVC is not a probabilistic classifier by default, but this example enables its built-in calibration option (probability=True).

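- Logistic regression (LogisticRegression, LR for short), tried with 3 parameter configurations:

  - L1 regularization (in multinomial mode in the code).

  - L2 regularization in One-vs-Rest (OvR) mode. In OvR, a multiclass problem is handled by treating the samples of one class as positives and the samples of all other classes as negatives, once per class.

    - Logistic regression with One-vs-Rest is not a multiclass classifier out of the box. As shown later, it has more trouble separating the 2nd and 3rd classes than the other estimators (these appear as Class 1 and Class 2 in the plot titles, which count from 0).

  - L2 regularization in multinomial mode, which fits a single multiclass model directly.

- Gaussian process classification (GaussianProcessClassifier, GPC for short).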
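The difference between the OvR and multinomial modes shows up in how per-class scores are turned into probabilities. The following is a minimal sketch, not scikit-learn's implementation: the scores are hypothetical numbers chosen for illustration, and the two helper functions are names introduced here. It follows the behavior described in the `LogisticRegression.predict_proba` documentation: OvR applies the logistic function to each class separately and then normalizes across classes, while multinomial applies a softmax over all classes at once. In a real fit the two modes would also learn different scores; the same scores are reused here only to isolate the normalization step.

```python
import math


def ovr_probabilities(scores):
    """Per-class sigmoid, then renormalize so the values sum to 1."""
    sigmoids = [1.0 / (1.0 + math.exp(-s)) for s in scores]
    total = sum(sigmoids)
    return [p / total for p in sigmoids]


def multinomial_probabilities(scores):
    """Softmax over all class scores at once."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical decision scores for one sample and three classes.
scores = [2.0, 0.5, -1.0]

p_ovr = ovr_probabilities(scores)
p_multinomial = multinomial_probabilities(scores)

print("OvR        :", [round(p, 3) for p in p_ovr])
print("Multinomial:", [round(p, 3) for p in p_multinomial])
```

Both vectors sum to 1 and rank the classes the same way, but the OvR vector is flatter for these scores, which is consistent with the lower probability intensities discussed later for the OvR model.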

Implementation [Python]

    # Author: Alexandre Gramfort
    # License: BSD 3 clause

    import matplotlib.pyplot as plt
    import numpy as np

    from sklearn.metrics import accuracy_score
    from sklearn.linear_model import LogisticRegression
    from sklearn.svm import SVC
    from sklearn.gaussian_process import GaussianProcessClassifier
    from sklearn.gaussian_process.kernels import RBF
    from sklearn import datasets

    iris = datasets.load_iris()
    X = iris.data[:, 0:2]  # we only take the first two features for visualization
    y = iris.target

    n_features = X.shape[1]

    C = 10
    kernel = 1.0 * RBF([1.0, 1.0])  # for GPC

    # Create 5 different classifiers.
    # Note: `multi_class` is deprecated since scikit-learn 1.5; this listing
    # keeps the arguments of the original example.
    classifiers = {
        'L1 logistic': LogisticRegression(C=C, penalty='l1',
                                          solver='saga',
                                          multi_class='multinomial',
                                          max_iter=10000),
        'L2 logistic (Multinomial)': LogisticRegression(C=C, penalty='l2',
                                                        solver='saga',
                                                        multi_class='multinomial',
                                                        max_iter=10000),
        'L2 logistic (OvR)': LogisticRegression(C=C, penalty='l2',
                                                solver='saga',
                                                multi_class='ovr',
                                                max_iter=10000),
        'Linear SVC': SVC(kernel='linear', C=C, probability=True,
                          random_state=0),
        'GPC': GaussianProcessClassifier(kernel)
    }

    n_classifiers = len(classifiers)

    plt.figure(figsize=(3 * 2, n_classifiers * 2))
    plt.subplots_adjust(bottom=.2, top=.95)

    # Build a 100 x 100 grid covering the plotted feature range.
    xx = np.linspace(3, 9, 100)
    yy = np.linspace(1, 5, 100).T  # .T is a no-op on a 1-D array
    xx, yy = np.meshgrid(xx, yy)
    Xfull = np.c_[xx.ravel(), yy.ravel()]

    for index, (name, classifier) in enumerate(classifiers.items()):
        classifier.fit(X, y)

        y_pred = classifier.predict(X)
        accuracy = accuracy_score(y, y_pred)
        print("Accuracy (train) for %s: %0.1f%% " % (name, accuracy * 100))

        # View probabilities:
        probas = classifier.predict_proba(Xfull)
        n_classes = np.unique(y_pred).size
        for k in range(n_classes):
            plt.subplot(n_classifiers, n_classes, index * n_classes + k + 1)
            plt.title("Class %d" % k)
            if k == 0:
                plt.ylabel(name)
            imshow_handle = plt.imshow(probas[:, k].reshape((100, 100)),
                                       extent=(3, 9, 1, 5), origin='lower')
            plt.xticks(())
            plt.yticks(())
            # Overlay the training samples predicted as class k.
            idx = (y_pred == k)
            if idx.any():
                plt.scatter(X[idx, 0], X[idx, 1], marker='o', c='w', edgecolor='k')

    # Shared horizontal colorbar at the bottom of the figure.
    ax = plt.axes([0.15, 0.04, 0.7, 0.05])
    plt.title("Probability")
    plt.colorbar(imshow_handle, cax=ax, orientation='horizontal')

    plt.show()
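The listing evaluates each classifier on a regular grid and then reshapes the flat probability column back into an image. The sketch below illustrates that round trip on a much smaller grid. The `values` array is a stand-in introduced here for `predict_proba(...)[:, k]`, and the 4 x 3 grid size is an arbitrary choice for readability.

```python
import numpy as np

# A small grid (4 x-values, 3 y-values) instead of the 100 x 100 grid above.
xs = np.linspace(3, 9, 4)      # x coordinates, one per column
ys = np.linspace(1, 5, 3)      # y coordinates, one per row
xx, yy = np.meshgrid(xs, ys)   # both arrays have shape (3, 4)

# One row per grid point; the two columns are (x, y).
grid_points = np.c_[xx.ravel(), yy.ravel()]   # shape (12, 2)

# Stand-in for one column of predict_proba: any per-point value works here.
values = grid_points[:, 0] + 10 * grid_points[:, 1]

# Reshaping with the meshgrid shape restores the 2-D layout:
# the row index follows y and the column index follows x.
image = values.reshape(xx.shape)

print(grid_points.shape, image.shape)
```

Because row 0 of `image` holds the smallest y value, passing `origin='lower'` to `imshow` places that row at the bottom, so the image lines up with the scatter coordinates given by `extent`.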

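Running the code

Approximate run time: 0 minutes 1.409 seconds.

The script prints the text below. As noted above, logistic regression with OvR is not a native multiclass classifier, so its accuracy is the lowest of the five models.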

    Accuracy (train) for L1 logistic: 83.3%
    Accuracy (train) for L2 logistic (Multinomial): 82.7%
    Accuracy (train) for L2 logistic (OvR): 79.3%
    Accuracy (train) for Linear SVC: 82.0%
    Accuracy (train) for GPC: 82.7%
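To put these percentages in perspective, they can be converted back into sample counts. This is a small arithmetic sketch, assuming the 150 samples of the iris dataset that the script trains and evaluates on. The dictionary simply restates the printed values.

```python
n_samples = 150  # the iris dataset contains 150 samples

# Training accuracies (in percent) as printed by the script.
reported_accuracy = {
    "L1 logistic": 83.3,
    "L2 logistic (Multinomial)": 82.7,
    "L2 logistic (OvR)": 79.3,
    "Linear SVC": 82.0,
    "GPC": 82.7,
}

# Number of correctly classified samples implied by each percentage.
correct_counts = {
    name: round(percent / 100 * n_samples)
    for name, percent in reported_accuracy.items()
}

for name, correct in correct_counts.items():
    print(f"{name}: {correct} correct, {n_samples - correct} misclassified")
```

Under this assumption, the gap between the best model and the OvR model amounts to a handful of training samples, so the ranking is best read together with the probability maps rather than on its own.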

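In the figure produced by the script, each row is a classifier and each column is a class. The white dots are the training samples predicted as that class, and the background color encodes the predicted probability (see the colorbar at the bottom of the figure: lighter, yellow colors mean higher probability and darker, deep-blue colors mean lower probability). Here again, logistic regression with OvR does less well: the probabilities it assigns in the regions of the Class 1 and Class 2 samples are comparatively low.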

Source download

- Python source file: plot_classification_probability.py

- Jupyter Notebook source file: plot_classification_probability.ipynb